
Making Sense of Artificial Intelligence

Joe Zirilli, Vice President, Artificial Intelligence, Parsons

I am here to tell you that you are not alone in trying to understand what artificial intelligence (AI) is and what it means for your daily life. I have developed countless AI applications, and I still cannot produce a comprehensive definition. I have tried to define it so many times that I have just given up! I have asked many software development professionals for their definition of AI, and what I have learned is that their answers usually depend on when they were first introduced to it.

The first time I became interested in AI was in college in the 1980s. As a software engineer, I immediately thought AI meant writing a computer program that could think and act like us. So, I started researching what was out there and soon realized that the technology was nowhere near what the words imply. While getting my master's degree, I learned about artificial neural networks and got excited again. Wow, artificial neural networks are like building an artificial brain! I soon realized that is not the case. The artificial neuron is a very crude representation of the neurons in our brain, and the learning mechanisms are slow, but that does not mean they are not useful. Recently we have seen an explosion in deep learning and large language models (LLMs), such as generative pre-trained transformers (GPT), and have seen that these implementations are exceptionally good, in many cases better than humans at specific tasks. My point is that what we call AI changes as the technology morphs. I could even make the case that Charles Babbage's Analytical Engine, designed in the 1800s, is AI.

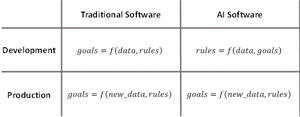

Now that we have that behind us, let us try to understand how AI can be applied. AI is made up of algorithms, and as such, it is implemented in software and hardware. AI algorithms are fundamentally different from traditional algorithms: traditional algorithms transform data into desired goals, while AI algorithms transform data and desired goals into rules.
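
To make the distinction concrete, here is a deliberately tiny Python sketch built around a hypothetical task: labeling temperatures as "hot." In the traditional version, a programmer writes the rule. In the AI-style version, the rule (a single threshold) is derived from example data plus the desired labels. The function names, the data, and the one-parameter "decision stump" learner are illustrative assumptions, far simpler than any real AI model.

```python
def is_hot_handwritten(temp_c: float) -> bool:
    """Traditional approach: the programmer chooses the rule (30 degrees C)."""
    return temp_c >= 30.0


def learn_threshold(temps: list[float], labels: list[bool]) -> float:
    """AI-style approach: derive the rule from data plus desired outputs.

    Tries each observed temperature as a candidate threshold and keeps the
    one that misclassifies the fewest training examples (a one-parameter
    "decision stump", vastly simpler than a real AI model).
    """
    best_threshold, best_errors = temps[0], len(temps) + 1
    for candidate in sorted(set(temps)):
        errors = sum((t >= candidate) != label for t, label in zip(temps, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Hypothetical training data: temperatures and the desired "hot" labels (the goals).
temps = [12.0, 18.5, 24.0, 27.5, 31.0, 33.5, 36.0]
labels = [False, False, False, False, True, True, True]

threshold = learn_threshold(temps, labels)
print(threshold)                                    # 31.0
print(is_hot_handwritten(32.0), 32.0 >= threshold)  # True True
```

The learned threshold (31.0) differs from the hand-picked one (30.0): the learned rule is only as good as the examples it was given, which is why the data matters so much.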

The development process is quite different, but the implementation in production can be similar, although there is a host of new issues surrounding the maintenance of the models (rules) developed by AI algorithms. Development of traditional software is the manual creation of algorithms that produce the desired outputs (goals).

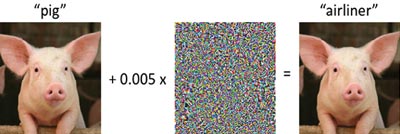

Development of AI software, by contrast, requires the development of algorithms that learn to produce rules from the given data and desired goals. This gives the old adage “garbage in, garbage out” (GIGO) a new twist. With AI, it is not just GIGO but “garbage in, garbage learned” (GIGL), which is much harder to diagnose when something goes wrong. With traditional software, you can perform comprehensive testing and ensure that it performs well enough for its intended use. AI software, however, learns to generalize, and that generalization can lead to issues in production. When given data similar to what it was trained on, it should perform quite well, but when unexpected data is introduced, the results can appear normal yet be far outside what is intended. Traditional software will produce errors or warnings, making it obvious that the data is outside intended ranges, but for AI that is not the case. When errors are introduced in the data, the outputs can be catastrophic.
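
The silent-failure problem can be illustrated with a toy sketch. Assume, hypothetically, that the true relationship is y = x squared, but the training data only covers x from 0 to 3. A straight line fitted by ordinary least squares (a stand-in for a learned model; real AI models are far more complex) does well inside that range, then returns a plausible-looking but badly wrong number far outside it, while a hand-written function with an explicit range check fails loudly. All names and values are illustrative.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def traditional_lookup(x: float) -> float:
    """Hand-written code states its valid range and fails loudly outside it."""
    if not 0.0 <= x <= 3.0:
        raise ValueError(f"input {x} is outside the supported range 0 to 3")
    return x * x


# Hypothetical training data: the true relationship is y = x**2,
# but we only ever observe x between 0 and 3.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [x * x for x in train_x]
slope, intercept = fit_line(train_x, train_y)  # learns y = 3x - 1


def learned_model(x: float) -> float:
    """The 'learned' rule: no notion of where its training data ended."""
    return slope * x + intercept


print(learned_model(2.5))    # 6.5, close to the true 6.25
print(learned_model(100.0))  # 299.0, looks normal, but the true value is 10000

try:
    traditional_lookup(100.0)
except ValueError as err:
    print("traditional code:", err)
```

The learned model never complains; nothing in its output signals that 100 is far beyond anything it has seen.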

So, when things go wrong and your pig cannot fly, what do you do? You try to explain why it failed and fix it, right? Well, that is the rub. The hard part of using AI approaches is explainability, and that has slowed the adoption of AI for critical tasks where errors or failures have severe consequences. The internal workings of an AI model are so complex that its failures cannot be traced the way traditional software bugs can. When you debug traditional software, you can find the lines of code that caused the wrong output and fix them. With AI, it is not so simple. You have to reevaluate (retrain) your model with examples it can learn from, so that it discriminates the way you want it to. In general, the more training data you have, the better your AI will perform.

If you decide to use AI, you will very quickly learn that the quantity and quality of your data account for most of the effort. Data is so important that there is a plethora of tools for cleansing, validating, munging, tracking, balancing, morphing, and synthesizing it. Most companies quickly realize that their data is not organized or complete enough to begin an AI effort.
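
As a rough illustration of the cleansing, validating, and balancing steps, the sketch below filters a handful of hypothetical sensor records, rejecting rows with missing values or physically implausible readings, and then counts the remaining labels to expose class imbalance. The record fields and the valid range are assumptions invented for this example; real projects typically rely on dedicated data-quality tooling.

```python
from collections import Counter

# Hypothetical raw records; None marks a missing value.
raw = [
    {"temp_c": 21.5, "label": "normal"},
    {"temp_c": None, "label": "normal"},
    {"temp_c": 19.0, "label": "normal"},
    {"temp_c": 999.0, "label": "fault"},  # sensor glitch: physically implausible
    {"temp_c": 88.0, "label": "fault"},
    {"temp_c": 22.0, "label": None},
]

VALID_RANGE = (-40.0, 150.0)  # assumed plausible range for this hypothetical sensor


def clean(records: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split records into usable rows and rejected rows with a reason."""
    kept, rejected = [], []
    for rec in records:
        if rec["temp_c"] is None or rec["label"] is None:
            rejected.append((rec, "missing value"))
        elif not VALID_RANGE[0] <= rec["temp_c"] <= VALID_RANGE[1]:
            rejected.append((rec, "out of range"))
        else:
            kept.append(rec)
    return kept, rejected


kept, rejected = clean(raw)
print(len(kept), "kept,", len(rejected), "rejected")  # 3 kept, 3 rejected

# Balance check: a 2-to-1 skew toward "normal" that a real project might
# address by collecting more fault examples or reweighting.
print(Counter(r["label"] for r in kept))  # Counter({'normal': 2, 'fault': 1})
```

Half of this tiny dataset is unusable before any learning starts, which mirrors the point that data preparation, not modeling, is usually most of the work.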

I would be remiss not to discuss the latest advances with GPT models, specifically ChatGPT, developed by OpenAI. The holy grail of AI is artificial general intelligence (AGI), the ability of an intelligent agent to understand or learn any intellectual task that human beings or other animals can. If you interact with ChatGPT, you will be amazed at how fast it answers your questions with in-depth information and lets you follow up with more questions while keeping track of the topic being discussed. Google searches are good, but each search is independent; it does not consider what you asked five seconds ago.

The more you use ChatGPT, however, the more errors you will start to see. It seems brilliant one minute, and the next it says something you suspect is false. You will soon learn that these models “hallucinate”: they are so determined to answer your question that they make things up! Why does this happen? Because the model does not understand what it is saying; it only predicts the most probable next word given the previous sequence of words. It is like knowing someone so well that you can predict the next words they are going to say. A tremendous amount of work (and money) is going into this technology, and the hallucination problem is being actively addressed, but again, the curation of the data used to train these LLMs will be a topic of hot debate.
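
The “predict the most probable next word” idea can be sketched with a bigram model, which is far cruder than an LLM: it conditions on only the single previous word, whereas real LLMs use a neural network over the whole preceding context. Trained on three hypothetical sentences, it confidently completes “berlin” into a fluent sentence that never appeared in its data and is false, a toy analogue of hallucination.

```python
from collections import Counter, defaultdict

# Hypothetical training text.
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "berlin is in germany",
]

# Count how often each word follows each other word (a bigram model).
follows: defaultdict[str, Counter] = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1


def generate(start: str, max_words: int = 8) -> str:
    """Greedily append the most frequent next word until none is known."""
    words = [start]
    while len(words) < max_words and follows.get(words[-1]):
        # most_common breaks ties by first occurrence in the training text.
        next_word, _count = follows[words[-1]].most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


print(generate("berlin"))  # berlin is the capital of france
```

Nothing in the model checks facts; it simply follows the most frequent continuations (“is” is followed by “the” twice but by “in” only once), which is why fluent output is no guarantee of truth.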

AI is here to stay, but our definition of AI is not. New technologies, like ChatGPT, will continue to change our understanding, and someday, AI may not feel artificial at all, just as a FaceTime call with someone halfway across the world would have been seen as magic 100 years ago and is now perfectly normal.